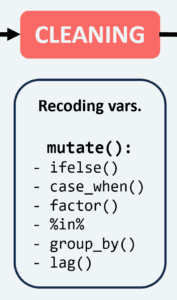

Cleaning longitudinal data is notoriously time-intensive, involving different steps, from importing data to merging and reshaping it. We covered this process in detail in our previous blog post. The next phase focuses on data cleaning, which includes recoding variables, generating new ones, and addressing missing data. Utilizing the synthetic data from our prior post based on Understanding Society, we’ll explore key commands for longitudinal data cleaning, equipping you with the skills to prepare your own data effectively.

Setting up the environment

You can follow up with this guide by running the code in R. We will use synthetic data (i.e., simulated) based on Understanding Society, a large panel study in the UK. You can access the real data for free from the UK Data Archive.

In a previous blog post we merged and reshaped the data in the long format. We will continue to work with this data here. If you want to follow along, you can download the data from here and all the code from here.

Access the code used here.

Access the data: long data.

Before we start, make sure you have the tidyverse and haven packages installed and loaded by running the following code:

install.packages("tidyverse")

install.packages("haven")

library(tidyverse)

library(haven)Although the haven package is not required for importing data; it simplifies working with and recoding data originally imported using it.

Next, we will load the data that we prepared in the previous post.

The data was stored as an “rds” file. We can load it using the read_rds():

usl <- read_rds("./data/usl_syn.rds")And we can have a quick look at it to make sure all is in order:

glimpse(usl)

## Rows: 204,028 ## Columns: 15 ## $ pidp <dbl> 5, 5, 5, 5, 6, 6, 6, 6, 7, 7, 7, 7, 10, 10, 10, 10, 11, 11, … ## $ wave <chr> "1", "2", "3", "4", "1", "2", "3", "4", "1", "2", "3", "4", … ## $ sex <dbl+lbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,… ## $ age <dbl+lbl> 28, 28, 28, 28, 80, 80, 80, 80, 60, 60, 60, 60, 42, 42, … ## $ hiqual <dbl+lbl> 1, 1, 1, 1, 9, 9, 9, 9, 3, 3, 3, 3, 1, 1, 1, 1, 1, 1, 1,… ## $ single <dbl+lbl> 1, 1, 1, 0, 0, 0, 0, 0, 1, NA, NA, NA, 0, NA, … ## $ urban <dbl+lbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, NA, NA, NA, 1, NA, … ## $ vote6 <dbl+lbl> 3, 4, 4, 4, 4, 4, 4, 3, 2, NA, NA, NA, 1, NA, … ## $ fimngrs <dbl+lbl> 3283.87, 4002.50, 3616.67, 850.50, 896.00, 709.00, 7… ## $ sclfsato <dbl+lbl> -9, 6, 2, 1, 6, 6, -8, 3, 2, NA, NA, NA, -9, NA, … ## $ sf12pcs <dbl+lbl> 54.51, 62.98, 56.97, 56.15, 53.93, 46.39, -8.00, 46.16, … ## $ sf12mcs <dbl+lbl> 55.73, 36.22, 60.02, 59.04, 46.48, 45.39, -8.00, 37.02, … ## $ istrtdaty <dbl+lbl> 2009, 2010, 2011, 2012, 2010, 2011, 2012, 2013, 2009, … ## $ sf1 <dbl+lbl> 2, 2, 1, 3, 4, 3, 3, 3, 5, NA, NA, NA, 2, NA, … ## $ present <lgl> TRUE, TRUE, TRUE, TRUE, TRUE, TRUE, TRUE, TRUE, TRUE, NA, NA…

Cleaning categorical variables

To get an intuition about how to clean data, we will start by exploring and recoding categorical variables. Let’s start with the sex variable.

count(usl, sex)

## # A tibble: 2 × 2 ## sex n ## <dbl+lbl> <int> ## 1 1 [male] 92364 ## 2 2 [female] 111664

Because the data was imported from a Stata file with labels, we can check the variable measures and what each category means by using the attributes() command. You can check the data codebook or questionnaire if you do not have this information in your own data.

attributes(usl$sex)

## $label ## [1] "sex" ## ## $format.stata ## [1] "%8.0g" ## ## $labels ## missing inapplicable refusal don't know male female ## -9 -8 -2 -1 1 2 ## ## $class ## [1] "haven_labelled" "vctrs_vctr" "double"

When we have categorical variables, we typically want to code them as factors. This makes it much easier to use in statistical models and graphs. We see that the sex is numerical and not a factor (both in the glimpse() and the count() commands it dbl meaning double or a precise number).

To make things easier to use later on, we can make a new variable called gndr.fct that is a factor with labels for “Man” and “Female”. We use the mutate() command together with factor() to do the recoding. We first input the original variable and then give the labels (should be added from the lowest value to the largest).

usl <- mutate(usl,

gndr.fct = factor(sex,

labels = c("Male", "Female")))After creating new variables, always check that the recoding is correct. We can use the count() command to compare the new variable with the old one:

count(usl, sex, gndr.fct)

## # A tibble: 2 × 3 ## sex gndr.fct n ## <dbl+lbl> <fct> <int> ## 1 1 [male] Male 92364 ## 2 2 [female] Female 111664

We can use a similar approach for the single variable:

count(usl, single)

## # A tibble: 3 × 2 ## single n ## <dbl+lbl> <int> ## 1 0 [No] 96690 ## 2 1 [Yes] 55984 ## 3 NA 51354

usl <- mutate(usl,

single.fct = factor(single,

labels = c("In relationship", "Single")))

count(usl, single, single.fct)## # A tibble: 3 × 3 ## single single.fct n ## <dbl+lbl> <fct> <int> ## 1 0 [No] In relationship 96690 ## 2 1 [Yes] Single 55984 ## 3 NA <NA> 51354

Let’s next look at the “urban” variable:

count(usl, urban)

## # A tibble: 4 × 2 ## urban n ## <dbl+lbl> <int> ## 1 -9 [missing] 47 ## 2 1 [urban area] 119657 ## 3 2 [rural area] 32970 ## 4 NA 51354

We see that we have some negative values. To understand what they stand for, we can again use the attribute() command. You might notice that the negative values are associated with missing data. This is the default in the Understanding Society data.

Another useful command for recoding is case_when(). For this function, we have to give some conditions and the values that the new variables should have if the condition is true (we put “~” between the condition and new values). We can have multiple conditions separated by commas. If some values are not included in any conditions, they will be coded as missing (which appears as NA in R).

We use this command to make a new variable “urban.fct” that becomes “Urban” when the original variable was 1 and “Rural” when it was 2. We then make this new variable a factor using the as.factor() command (note that originally, it became a character or string variable):

usl <- mutate(usl,

urban.fct = case_when(urban == 1 ~ "Urban",

urban == 2 ~ "Rural"),

urban.fct = as.factor(urban.fct))

count(usl, urban, urban.fct)## # A tibble: 4 × 3 ## urban urban.fct n ## <dbl+lbl> <fct> <int> ## 1 -9 [missing] <NA> 47 ## 2 1 [urban area] Urban 119657 ## 3 2 [rural area] Rural 32970 ## 4 NA <NA> 51354

Seems that it worked as expected. We can now move to the next variable, “hiqual”.

count(usl, hiqual)

## # A tibble: 7 × 2 ## hiqual n ## <dbl+lbl> <int> ## 1 -9 [missing] 444 ## 2 1 [Degree] 43932 ## 3 2 [Other higher] 22256 ## 4 3 [A level etc] 38896 ## 5 4 [GCSE etc] 41936 ## 6 5 [Other qual] 20816 ## 7 9 [No qual] 35748

This can be recoded in several different ways. For now, we will create a dichotomous variable that codes people who have a degree versus everyone else. We can use a strategy similar to the one we used before. One difference now is that the condition needs to include multiple values. For this, we use the %in% command. This is used to check if elements of the vector on the left side are present on the right side. If this is true, it will give a TRUE value; if it’s not, it will give a FALSE. For example, if we want to check if “hiqual” has the values 3 to 9, we can use the following command: hiqual %in% 3:9 (3:9 produces all the numbers between 3 and 9). We use this approach together with case_when() to make a factor variable that tells us if someone has a degree or not:

usl <- mutate(usl,

degree.fct = case_when(hiqual %in% 1:2 ~ "Degree",

hiqual %in% 3:9 ~ "No degree"),

degree.fct = as.factor(degree.fct))

count(usl, hiqual, degree.fct)## # A tibble: 7 × 3 ## hiqual degree.fct n ## <dbl+lbl> <fct> <int> ## 1 -9 [missing] <NA> 444 ## 2 1 [Degree] Degree 43932 ## 3 2 [Other higher] Degree 22256 ## 4 3 [A level etc] No degree 38896 ## 5 4 [GCSE etc] No degree 41936 ## 6 5 [Other qual] No degree 20816 ## 7 9 [No qual] No degree 35748

It seems to have worked as expected. We can now move to the next variable, “vote6”.

count(usl, vote6)

## # A tibble: 9 × 2 ## vote6 n ## <dbl+lbl> <int> ## 1 -9 [missing] 264 ## 2 -7 [proxy] 9079 ## 3 -2 [refusal] 114 ## 4 -1 [don't know] 98 ## 5 1 [very] 14638 ## 6 2 [fairly] 49363 ## 7 3 [not very] 41082 ## 8 4 [or not at all interested?] 38036 ## 9 NA 51354

It seems this variable also requires some work. We will use a slightly different strategy here to get the same result. Let’s start by coding first all the negative values as missing. We can use the ifelse() command for this. This takes a condition and then two values: the new value if the condition is true and the new value if the condition is false. We will make a condition that “vote6” is smaller than 0 (that covers all the missing codes). If this is true, we will code it as missing; if not, we will copy the original variable. We will then make a factor version of the variable using the factor() command and giving it the four labels that we saw in the table:

usl <- mutate(usl,

vote6.rec = ifelse(vote6 < 0, NA, vote6),

vote6.fct = factor(vote6.rec,

labels = c("Very",

"Fairly",

"Not very",

"Not at all")))

count(usl, vote6, vote6.fct)## # A tibble: 9 × 3 ## vote6 vote6.fct n ## <dbl+lbl> <fct> <int> ## 1 -9 [missing] <NA> 264 ## 2 -7 [proxy] <NA> 9079 ## 3 -2 [refusal] <NA> 114 ## 4 -1 [don't know] <NA> 98 ## 5 1 [very] Very 14638 ## 6 2 [fairly] Fairly 49363 ## 7 3 [not very] Not very 41082 ## 8 4 [or not at all interested?] Not at all 38036 ## 9 NA <NA> 51354

We can use a similar strategy for the “sclfsato” variable. This has a few negative values that we will code as missing. Also, this has seven categories, so it might be possible to use them either as continuous or categorical. We can have both versions ready for future use:

usl <- mutate(usl,

sati = ifelse(sclfsato < 0, NA, sclfsato),

sati.fct = factor(sati,

labels = c("Completely dissatisfied",

"Mostly dissatisfied",

"Somewhat dissatisfied",

"Neither sat nor dissat",

"Somewhat satisfied",

"Mostly satisfied",

"Completely satisfied")))

count(usl, sclfsato, sati, sati.fct)## # A tibble: 13 × 4 ## sclfsato sati sati.fct n ## <dbl+lbl> <dbl> <fct> <int> ## 1 -9 [missing] NA <NA> 8371 ## 2 -8 [inapplicable] NA <NA> 11903 ## 3 -7 [proxy] NA <NA> 9079 ## 4 -2 [refusal] NA <NA> 25 ## 5 -1 [don't know] NA <NA> 599 ## 6 1 [completely dissatisfied] 1 Completely dissatisfied 3282 ## 7 2 [mostly dissatisfied] 2 Mostly dissatisfied 6642 ## 8 3 [somewhat dissatisfied] 3 Somewhat dissatisfied 9657 ## 9 4 [Neither Sat nor Dissat] 4 Neither sat nor dissat 11735 ## 10 5 [somewhat satisfied] 5 Somewhat satisfied 21619 ## 11 6 [mostly satisfied] 6 Mostly satisfied 55016 ## 12 7 [completely satisfied] 7 Completely satisfied 14746 ## 13 NA NA <NA> 51354

Cleaning continuous variables

Next, look at a continuous variable and see how we can clean it. We will focus on the “fimngrs” variable, the monthly income. We can explore it using a histogram and some summary statistics:

hist(usl$fimngrs)

summary(usl$fimngrs)

## Min. 1st Qu. Median Mean 3rd Qu. Max. NA's ## -8851.8 640.3 1229.9 1594.6 2083.6 27587.5 51354

It has a couple of issues, like negative values and some very high ones. If we think the negative values are not valid values, we can code them as missing using the ifelse() command.

usl <- mutate(usl, fimngrs = ifelse(fimngrs < 0, NA, fimngrs)) hist(usl$fimngrs)

To improve the distribution, we can also cap very large values. This is a trade-off between precision and a more “normal” distribution. We can create a new variable and decide later which one to use depending on the analysis. The procedure is similar to before:

usl <- mutate(usl, fimngrs.cap = ifelse(fimngrs > 10000, 10000, fimngrs)) hist(usl$fimngrs.cap)

Finally, we can create a logged version of the income. This is useful as income is usually skewed, and the logged version is more normally distributed. We can use the log() command for this. We add 10 to the variable to avoid issues with zero values that would be coded as missing otherwise. The constant value can be chosen by exploring the resulting distribution.

hist(log(usl$fimngrs.cap + 10))

Now we can create the new variable for later use:

usl <- mutate(usl, logincome = log(fimngrs.cap + 10))

We should also check if our time variables are coded correctly. In this data, we have both the wave variable and the year of the interview (“intraday”). Let’s explore them using tables:

count(usl, wave)

## # A tibble: 4 × 2 ## wave n ## <chr> <int> ## 1 1 51007 ## 2 2 51007 ## 3 3 51007 ## 4 4 51007

count(usl, istrtdaty)

## # A tibble: 10 × 2 ## istrtdaty n ## <dbl+lbl> <int> ## 1 -9 [missing] 27 ## 2 -8 [inapplicable] 965 ## 3 -1 [don't know] 1 ## 4 2009 24513 ## 5 2010 41941 ## 6 2011 36841 ## 7 2012 31585 ## 8 2013 15563 ## 9 2014 1238 ## 10 NA 51354

The wave variable has no missing cases but is coded as a character (“chr” in the table). We should recode it as numeric. The “istrtdaty” variable has some missing cases and also is coded as numeric with labels “haven_labelled”. We can recode them as missing using the ifelse() command. We can also recode both variables as numeric:

usl <- usl |>

mutate(wave = as.numeric(wave),

year = ifelse(istrtdaty < 0, NA, as.numeric(istrtdaty)))count(usl, wave)

## # A tibble: 4 × 2 ## wave n ## <dbl> <int> ## 1 1 51007 ## 2 2 51007 ## 3 3 51007 ## 4 4 51007

count(usl, istrtdaty, year)

## # A tibble: 10 × 3 ## istrtdaty year n ## <dbl+lbl> <dbl> <int> ## 1 -9 [missing] NA 27 ## 2 -8 [inapplicable] NA 965 ## 3 -1 [don't know] NA 1 ## 4 2009 2009 24513 ## 5 2010 2010 41941 ## 6 2011 2011 36841 ## 7 2012 2012 31585 ## 8 2013 2013 15563 ## 9 2014 2014 1238 ## 10 NA NA 51354

Now they look fine.

Recoding multiple variables efficiently

So far, we have recorded one variable at a time. Sometimes, we might want to recode multiple variables concurrently. Similar to what we saw in the previous post with renaming variables using rename_at() we can also recode multiple variables.

To see how to do this, we can check two variables that measure health: “sf12mcs” measures mental health, while “sf12pcs” measures physical health. Let’s see how they look:

hist(usl$sf12pcs)

hist(usl$sf12mcs)

These variables are composites, and the values should be between 0 and 100, with averages of around 50. It seems we have negative values for missing categories. Let’s code them as NA. To be more efficient, we can use a special version of mutate() which can be used to apply a particular function to certain variables in the dataset. The command mutate_at() gets three inputs:

- the data

- the variables we want to apply the function to (

vars()). Here, we use the helper functionmatches()to look up all variables that have “sf12” in the name - the function we want to apply to these variables. Here we use

ifelse()with the condition that the variable is smaller than 0 (. < 0) then it should be recoded asNA, otherwise, it should keep the original values (.):

usl <- mutate_at(usl,

vars(matches("sf12")),

~ ifelse(. < 0, NA, .))

summary(select(usl, sf12pcs, sf12mcs))## sf12pcs sf12mcs ## Min. : 4.41 Min. : 0.00 ## 1st Qu.:44.63 1st Qu.:44.72 ## Median :53.46 Median :51.98 ## Mean :49.63 Mean :49.90 ## 3rd Qu.:57.47 3rd Qu.:57.16 ## Max. :74.90 Max. :78.08 ## NA's :76039 NA's :76039

Creating grouped and lagged statistics

Many of the data-cleaning procedures we followed so far can also be used with cross-sectional data. However, longitudinal data has some unique features that require specific cleaning steps. For example, we might want to create individual averages for variables that are measured multiple times. Similarly, we might want to create lagged versions of the variables. We can use the group_by() and mutate() commands to create these new variables.

Before that, let’s talk about the pipe operator |>. This operator is used to chain commands together. It takes the output of the command on the left side and uses it as the input for the command on the right side. This makes the code easier to read and write. We can use the pipe operator to chain multiple commands together. For example, we can use it to create a new variable and also make a table of it:

usl |> mutate(famale = sex - 1) |> count(famale, gndr.fct)

## # A tibble: 2 × 3 ## famale gndr.fct n ## <dbl> <fct> <int> ## 1 0 Male 92364 ## 2 1 Female 111664

This is the same as the following code:

count(mutate(usl, female = sex - 1), female, gndr.fct)

## # A tibble: 2 × 3 ## female gndr.fct n ## <dbl> <fct> <int> ## 1 0 Male 92364 ## 2 1 Female 111664

As you can see, the code with the pipe is much easier to read. We will use this approach quite a bit in the following steps.

Sometimes, we want to create individual-level statistics. For example, we might want to create an individual-level average for the satisfaction variable. We can use the group_by() command to group the data by the person identifier pidp. Then, the mutate() command will create new variables that are the average of the original variable. We can also create a deviation variable, which is the difference between the original variable and the average. We can do this for the satisfaction variable and the two health variables:

usl <- usl %>%

group_by(pidp) %>%

mutate(sati.ind = mean(sati, na.rm = TRUE),

sati.dev = sati - sati.ind,

sf12pcs.ind = mean(sf12pcs, na.rm = TRUE),

sf12pcs.dev = sf12pcs - sf12pcs.ind,

sf12mcs.ind = mean(sf12mcs, na.rm = TRUE),

sf12mcs.dev = sf12mcs - sf12mcs.ind) |>

ungroup()Remember to ungroup() the data, otherwise future data cleaning actions will also be done separately for each group.

We can check how the new variables look for satisfaction:

usl |> select(pidp, wave, sati, sati.ind, sati.dev) |> head()

## # A tibble: 6 × 5 ## pidp wave sati sati.ind sati.dev ## <dbl> <dbl> <dbl> <dbl> <dbl> ## 1 5 1 NA 3 NA ## 2 5 2 6 3 3 ## 3 5 3 2 3 -1 ## 4 5 4 1 3 -2 ## 5 6 1 6 5 1 ## 6 6 2 6 5 1

We see that, for example, for an individual with “pidp” = 5, the average satisfaction is 3. We also see that the deviation score tells us if that particular wave was better or worse than the individual average. These variables can be useful in understanding complex relationships in the data.

Let’s look at another example. We have worked with unbalanced data so far. Imagine you want to find out how many waves someone participated in. We can use the sum(present) command to count the number of waves someone was present and by combining that with the group_by(pidp) command, we can do this at the individual level.

This command works because even though “present” is coded as logical, R automatically converts TRUE to 1 in the background.

usl <- usl %>% group_by(pidp) %>% mutate(waves = sum(present, na.rm = T)) |> ungroup() count(usl, waves)

## # A tibble: 4 × 2 ## waves n ## <int> <int> ## 1 1 40724 ## 2 2 26932 ## 3 3 29380 ## 4 4 106992

If we wanted to create a balanced dataset, we could use the filter() command to keep only the cases present in all the waves, which now would be individuals with “wave” = 4.

Another type of recoding we may want to do with longitudinal data is lagging. This means getting a value from a previous wave and adding it to the current wave. For example, this can be useful in investigating if health in the previous wave impacts productivity in the current wave. For this, we can use the lag() command. We can use the group_by() command to group the data by the person identifier pidp and then use the mutate() command to create lagged variables. We can do this for the satisfaction and the two health variables:

# Create lagged variables

usl <- usl %>%

group_by(pidp) %>%

mutate(sati.lag = lag(sati),

sf12pcs.lag = lag(sf12pcs),

sf12mcs.lag = lag(sf12mcs)) |>

ungroup()We can check how the new variables look:

usl |> select(pidp, wave, sati, sati.lag, sf12pcs, sf12pcs.lag, sf12mcs, sf12mcs.lag) |> head()

## # A tibble: 6 × 8 ## pidp wave sati sati.lag sf12pcs sf12pcs.lag sf12mcs sf12mcs.lag ## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> ## 1 5 1 NA NA 54.5 NA 55.7 NA ## 2 5 2 6 NA 63.0 54.5 36.2 55.7 ## 3 5 3 2 6 57.0 63.0 60.0 36.2 ## 4 5 4 1 2 56.2 57.0 59.0 60.0 ## 5 6 1 6 NA 53.9 NA 46.5 NA ## 6 6 2 6 6 46.4 53.9 45.4 46.5

As expected, the values have shifted down by one wave. This also means that the new values have missing information on the first wave. This is because there is no previous wave to take the information from.

Clean up the data and reshape

We have finished doing most of the data recoding we wanted to do. Now, we can remove the variables we do not need anymore using the select() command. We can use the “-” sign to exclude variables.

usl <- select(usl,

-c(sex, urban, vote6, vote6.rec))We can also create a wide version of the data. This is useful for some types of analysis. You can see the previous post for a discussion on how to use this command.

usw <- pivot_wider(

usl,

values_from = !c(pidp, gndr.fct, age, hiqual, wave,

degree.fct, sati.ind, sf12pcs.ind, sf12mcs.ind, waves),

names_sep = "_",

names_from = wave

)Finally, we can export both the long and wide data for future use:

save(usl, usw, file = "./data/us_clean_syn.RData")

Conclusions

We have covered the most common commands used to clean longitudinal data. This included recoding categorical and continuous variables, creating individual-level statistics, and lagged variables. We have used the pipe operator to make the code more readable and efficient, and the group_by() and mutate() commands to create new variables at the individual level.

Data transformation and data cleaning are essential steps in the data analysis process. They can be time-consuming but are crucial for the quality of the analysis. We hope this guide has given you a good understanding of how to clean longitudinal data in R. You are now ready to move to the next stage, which is exploratory data analysis. You can check how to do that using tables and summary statistics in this blog post and how to do graphs in this post. You can adapt the process covered in this post and the previous one to your own data. Any questions or comments are welcome in the comment section below!

Was the information useful?

Consider supporting the site:

4 responses to “Cleaning longitudinal data in R: a step-by-step guide”

[…] you want to follow along you can download the data we cleaned in a previous post. Remember to set up your working directory as previously described. Next let’s load the […]

[…] the variables and use tables and visualization to explore it. We cover these next stages in this blogpost and this […]

[…] explore longitudinal data using R. We will apply these techniques to the synthetic data from our previous post that replicates a large social science panel study. Visualization complements the exploration […]

[…] – Cleaning longitudinal data in R: a step-by-step guide […]